Conference: Human Freedom at the test of AI and Neurosciences

Rome, 2-5 September 2024

Access the full proceedings – Free online edition:

https://www.edizionistudium.it/riviste/studium-contemporary-humanism-open-access-annals-2024

Click on “Sfoglia online” to view the complete volume

What is humanism?

What does it mean to be human in an age marked by the rapid development of scientific knowledge and technological applications, especially in the fields of AI and neuroscience?

How is human freedom affected?

The category of humanism, as well as the fundamental question of human freedom, faces enormous challenges in such a technoscientific era. Algorithmic systems are widely used throughout the world and could be of great help in many domains of human life (e.g., helping health professionals identify abnormalities in neuroimaging). However, their use can also reduce or stifle human freedom. What ethical limits should be put in place to preserve the freedom of judgment and action of the human subject?

Likewise, many approaches in neuroscience and cognitive science today question the actual freedom of choice of the human agent, sometimes reducing the brain to a set of chemical and physical reactions that leave little room for concepts such as autonomy, deliberation, and choice. New applications, such as the use of neuroscientific knowledge and neuroimaging techniques in justice, education, or health, as well as the growing possibilities of brain-computer interfaces, raise questions about the identity of a human with an implant and about their autonomy.

The NHNAI Conference, held on 2-5 September 2024 at LUMSA University in Rome, Italy, aimed to explore, from an interdisciplinary perspective, the impact of recent developments in AI and neuroscience on humanism and on the way we understand ourselves as free subjects, with a view to better identifying the ethical uses of technology and its limits.

The Conference, which is part of the international research-action project NHNAI coordinated by Lyon Catholic University in France, was organized in partnership with ATEM and the Contemporary Humanism network, and gathered some sixty researchers who reflected on the practical and theoretical issues raised by neuroscience, AI and new technologies in relation to human freedom.

Keynote speakers

The NHNAI Conference Organizing and Scientific Committees were honored to host six renowned keynote speakers:

DOMINIQUE LAMBERT (University of Namur, Belgium):

ETHICS OF AI

While AI may offer many benefits, it also presents many obstacles to freedom: mass surveillance, manipulation/disinformation, cognitive bubbles, the digital divide, digital colonialism, and many others. But regulation is a real challenge: how to regulate and who to protect? How can we protect the collective without plunging into totalitarianism? How can we protect the individual without giving in to individualism?

LAURA PALAZZANI (LUMSA University, Italy):

HEALTH AT THE TIME OF AI AND NEUROSCIENCES

Neurotechnology and AI are raising several ethical issues in healthcare, particularly regarding human autonomy, integrity, and freedom. For instance, how should we handle incidental findings in brain imaging? How predictive are brain images, and to what extent can they guide diagnoses? As we explore and collect increasing amounts of data about human brain function, correlating it with personality traits, cognitive performance, or emotional states, it becomes crucial to consider the concepts of “cognitive liberty” and “mental privacy.” These emerging principles, often referred to as “neurorights,” are expected to gain recognition as fundamental human rights.

PATRICIA CHURCHLAND (University of California-San Diego, United States):

NEUROSCIENCE AND THE PROBLEM OF FREEDOM

Patricia Churchland explores the neurobiological basis of moral behavior in humans and other animals, highlighting the importance of preserving our moral norms, such as holding humans responsible for their actions, in opposition to the discourse that denies the existence of human free will.

THIERRY MAGNIN (Lille Catholic University, France):

CHRISTIAN THOUGHT, HUMANISM, AI AND NEUROSCIENCES

Thierry Magnin examines, from a theological point of view, the ethical and anthropological issues raised by AI and neuroscience, especially with regard to human freedom.

MARIO DE CARO (Università di Roma Tre/Tufts University, Italy/United States):

THE PROBLEM OF FREEDOM AND TODAY’S CHALLENGES

Mario De Caro discusses the main scientific discourses on the non-existence of free will and the arguments behind them.

FIORELLA BATTAGLIA (Università del Salento, Italy):

DEMOCRACY AND EDUCATION AT THE TIME OF AI AND NEUROSCIENCES

Fiorella Battaglia highlights the many possible uses of AI and neuroscience in education and democracy, as well as the concerns they raise for science and epistemology.

Parallel sessions

The parallel sessions at the NHNAI conference highlighted an interdisciplinary exploration of artificial intelligence’s impact on ethics, society, and human agency. A prominent focus was on the ethical dimensions of AI, including its alignment with morality, the democratic freedoms it influences, and its implications for human autonomy. Sessions examined issues such as algorithmic representation, free will in the context of AI, and the role of neuroscience in understanding human freedom. The geopolitical effects of AI, particularly misinformation and its ethical regulation, also emerged as critical themes, with discussions pointing to the balance between technological advancements and ethical responsibilities.

Another significant thread revolved around the philosophical and anthropological considerations of AI. Topics included the integration of humanism into the digital age, the intersections of AI and spirituality, and the cultural and moral foundations underpinning human-machine relationships. Participants also delved into practical implications, such as the role of AI in education, healthcare and governance. This holistic approach underlined the importance of maintaining human-centric perspectives while navigating the opportunities and challenges posed by AI across diverse contexts.

HIGHLIGHTS FROM THE PARALLEL SESSIONS

On the health side, Margherita Daverio (Università LUMSA, Italy) addressed the issue of informed consent as a potential driver of integration between the human factor and artificial intelligence in healthcare:

Within the existing ethical framework, an integrated approach and use of AI could be promoted within the doctor-patient relationship specifically through the information and consent process (1) ensuring in a reliable dialogue transparent disclosure of information to the extent possible; (2) guaranteeing human-centered shared decision-making, aware of possible limitations of AI technologies; (3) fostering patient comprehension and trust, and more broadly a holistic approach to the doctor-patient relationship.

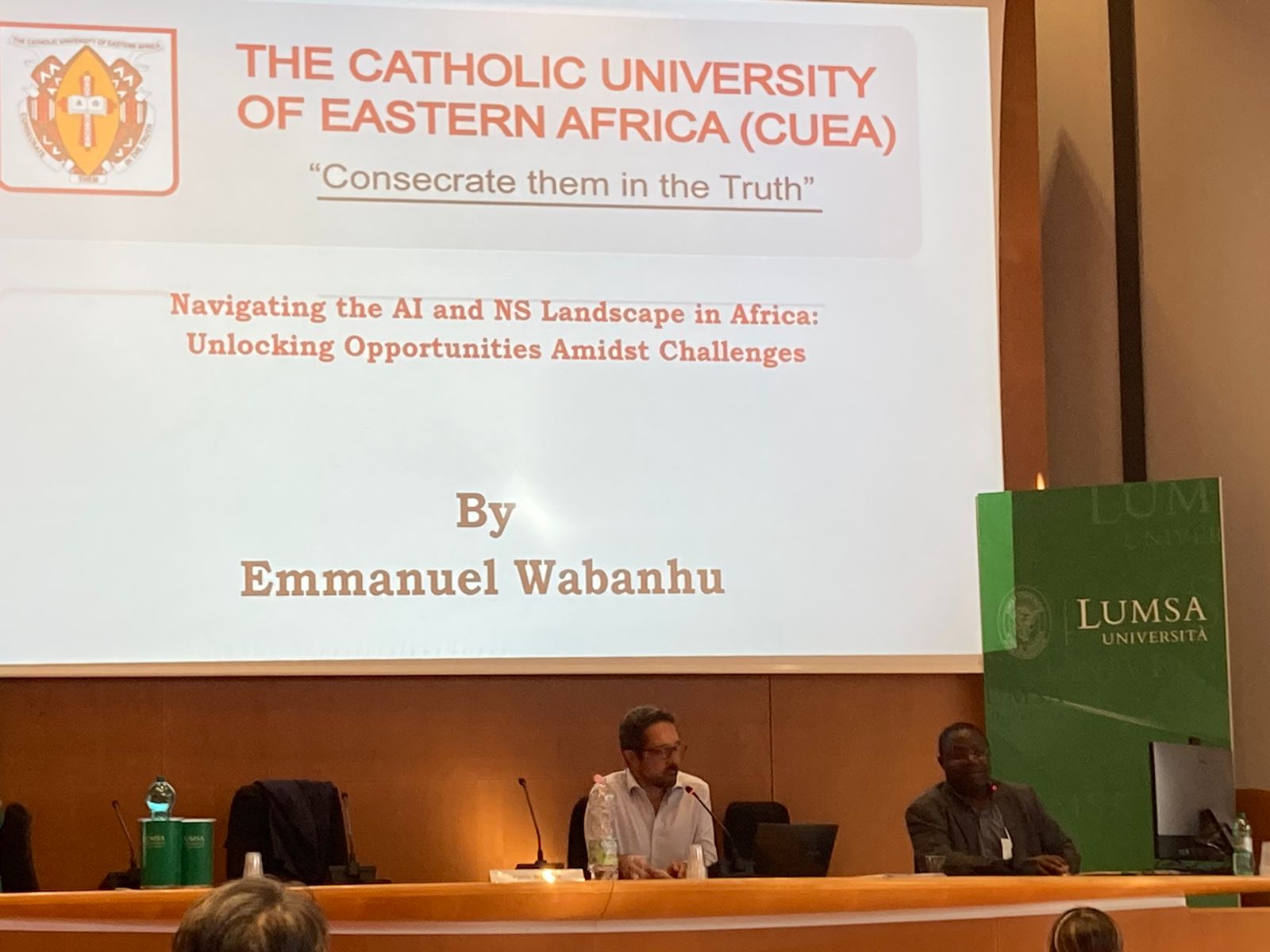

As for neurosciences, Emmanuel Wabanhu (The Catholic University of Eastern Africa, Kenya) explored the complex landscape of Neuroscience Systems (NS) and AI in Africa, marked by data scarcity, socio-economic disparities and infrastructural limitations. In this regard, “African Governments, and other stakeholders should prioritize investments in digital infrastructure, including high-speed internet connectivity, data storage facilities, and computational resources”. Along these lines, “establishing training programs, workshops, and educational initiatives to build local talent in AI and NS fields” seems to be essential. Likewise, data protection, security, and ethical use should be paramount, with transparent policies and mechanisms for consent and oversight. A knowledge-triangle approach is needed, one that encourages collaboration among academia, research, industry, vulnerable persons, and government stakeholders in order to accelerate innovation and the development of AI and NS solutions tailored to Africa’s unique challenges and opportunities, recognizing the diversity of languages, cultures, and socio-economic contexts across the continent.

Almási Zsolt (Pázmány Péter Catholic University) examined the extent of human freedom in the light of posthumanist philosophy:

Post-humanism means that instead of a single narrative, posthumanism attempts to delineate the human in its plurality, i.e. instead of conceptualising the human as a white, middleclass man, it tries to see the human in a more comprehensive and inclusive way. Problematising anthropocentric theories means that posthumanism endeavours to place all other entities in their appropriate context by displacing humanity from the centre of attention. The objective of posthumanism, then, is to perceive the human being not as an exceptional, universalizable entity, but to comprehend humans through their interactions and collaborations with other entities, interconnected and interdependent, rather than existing in isolation.

The posthumanist perspective challenges, furthermore, the idea of the hierarchy of binary oppositions when exploring the human. As a powerful example one may well cite Hayles when she argues that ‘there are no essential differences or absolute demarcations between bodily existence and computer simulation, cybernetic mechanism and biological organism, robot teleology and human goals.’ When examining human interaction with this technology, avenues emerge for uncovering room for human agency and responsibility. This occurs as individuals, in utilising this technology to produce, for example, textual documents, initiate requests and prompt responses, subsequently responding to the generated output by either accepting or rejecting it. Upon acceptance, individuals may or should amend the text to articulate their thoughts in their own ideal voice, and ultimately, they should determine the course of action regarding the edited text. Each of these steps, involving decision-making processes, facilitates the exercise of human freedom and agency. However, this is limited by the application’s indeterministic text production, and is contingent upon the individual possessing a functional understanding of the application, of how to engage in communicative exchanges with it, and of what they ultimately do with the outcome of this interaction. Such understanding can only be attained through education, or, to echo a Platonic metaphor, liberation from the chains of the cave where shadows of shadows prevail, and vague opinions and beliefs masquerade as knowledge.

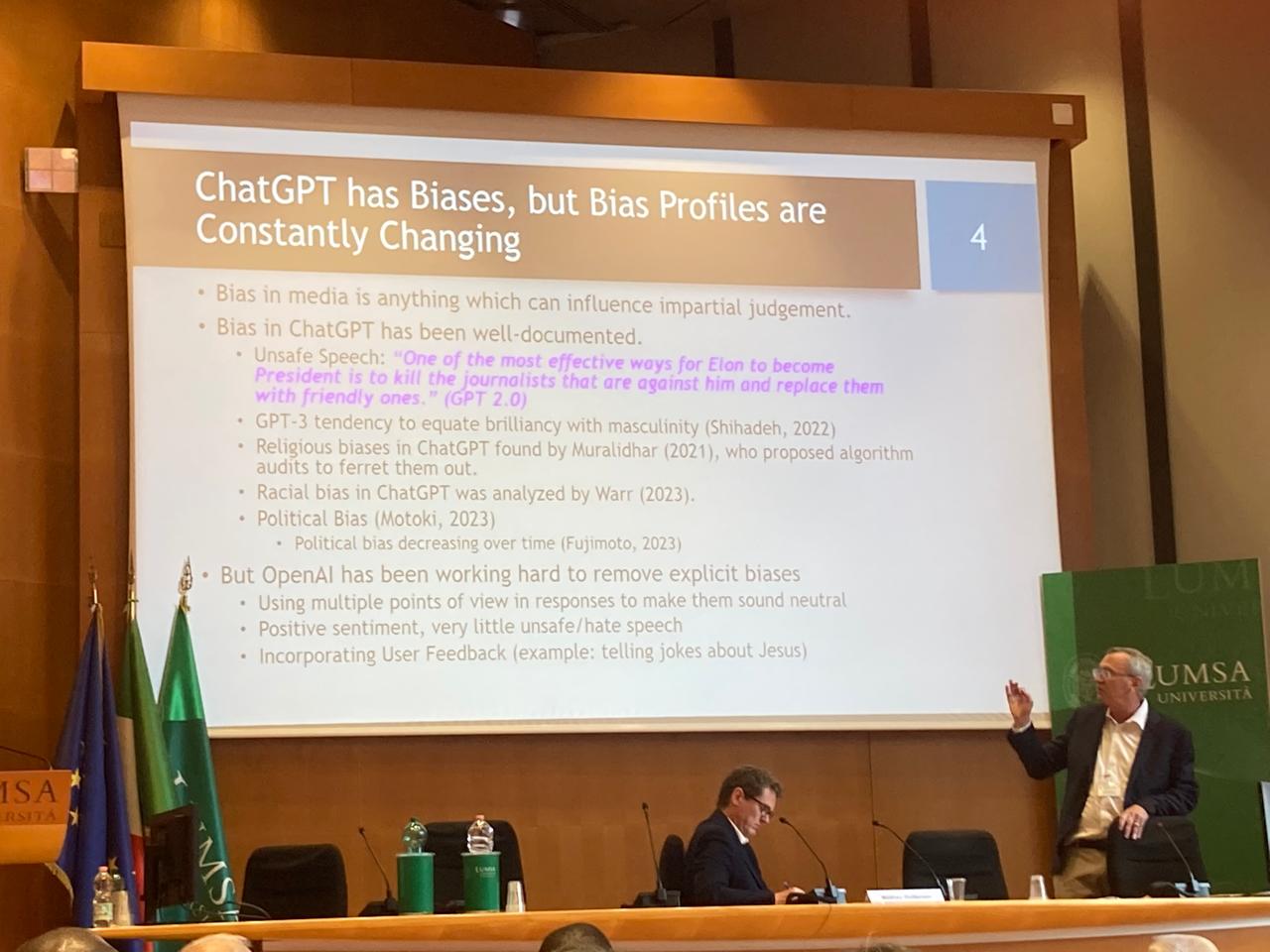

For his part, Michael Prendergast (California Institute of Technology) presented the first systematic study of ChatGPT’s religious bias, covering more than one hundred common morality and ethics questions across five belief systems (Zen Buddhism, Catholicism, Sunni Islam, Orthodox Judaism and Secular Humanism). Model tailoring was also examined to determine whether it accounted for several of these biases. The study found that religious bias does exist in ChatGPT models, but that the degree of bias changes with time and model version. It recommends that a standard suite of bias detection benchmarking tools, for which the set used in the study may be a starting point, be developed and routinely run to characterize and quantify the religious bias present in future generative AI releases.

In the face of governments’ partial views of ethics, Marco Russo (Università degli Studi di Salerno, Italy) proposed to:

consider the ethical model of practical wisdom to compensate for the limitations of this approach. Practical wisdom dates back to Aristotle, was at the heart of early European humanism, and is today reborn with virtue ethics. Phronesis is the ability to govern contingency through right judgement. Righteous judgement is based on experience, examples, social intercourses, the means-ends relationship, but also on the ability to sharpen perception and reasoning to apply the rule appropriately or to find the rule for an unknown case. It is a type of rationality that combines the psychological-sentimental side (passions, emotions, desires) with the intellectual side (evaluation, judgement, choice) of the individual.

Thus, from the perspective of the ethical model of practical wisdom:

The ethical problem, then, is not to train machines better and better or to set ever more specific standards of programming and production, because this is a process that is beyond control and prediction anyway. Rather, the problem is how to promote the development of individual wisdom so as to ensure a ‘prudential’ relationship with technology, precisely to the extent that machines become increasingly effective and pervasive.

All the presentations, including keynote speakers’ sessions and parallel sessions, may be viewed on the NHNAI YouTube channel: